In this tutorial, you will learn how to train a bidirectional Long Short-Term Memory (LSTM) network for sentiment analysis using the TensorFlow and Keras APIs.

The IMDB reviews dataset will be used to train the deep learning model. Since the reviews are in textual form, we will use a recurrent neural network, specifically an LSTM, which can retain information about the important words that occur in positive and negative reviews.

This tutorial uses the following tools/libraries to classify movie reviews:

- Python

- Jupyter Notebook

- TensorFlow

- Keras

- pandas

- matplotlib

Article Notebook

Code: Sentiment Analysis using LSTM via TensorFlow and Keras

Prepared By: Awais Naeem (awais.naeem@embedded-robotics.com)

Copyrights: www.embedded-robotics.com

Disclaimer: This code can be distributed with the proper mention of the owner’s copyrights

Notebook Link: https://github.com/embedded-robotics/datascience/blob/master/imdb_reviews_LSTM_sentiment_analysis/keras_LSTM_sentiment_analysis.ipynb

IMDB Reviews Dataset

The IMDB dataset contains 50k movie reviews in textual form, each with a classification label indicating whether the review is deemed ‘positive’ or ‘negative’.

Dataset Link: https://www.kaggle.com/datasets/lakshmi25npathi/imdb-dataset-of-50k-movie-reviews

Input Data: A textual paragraph describing the critique of the movie

Output Labels: ReviewSentiment as ‘positive’ or ‘negative’

Data Reading and Pre-Processing

Let’s first import all the Python libraries needed to read the data and process it using the TensorFlow and Keras APIs:

import os

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import LSTM, Dense, Embedding, Bidirectional

from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint

Download the dataset from the Kaggle website and place it in the working directory of the project. You can then read the dataset using the pandas read_csv function:

reviews = pd.read_csv('IMDB Dataset.csv')

reviews.head()

Output:

review sentiment

0 One of the other reviewers has mentioned that ... positive

1 A wonderful little production. <br /><br />The... positive

2 I thought this was a wonderful way to spend ti... positive

3 Basically there's a family where a little boy ... negative

4 Petter Mattei's "Love in the Time of Money" is... positive

To build a binary classification model, the sentiment column should be converted to numeric values such that ‘positive’ sentiment is regarded as 1 and ‘negative’ sentiment as 0. We can achieve this easily by modifying the column of pandas DataFrame using np.where():

reviews['sentiment'] = np.where(reviews['sentiment'] == 'positive', 1, 0)

Next, we convert the labels and reviews to NumPy arrays, since the pre-processing methods work more naturally with arrays than with pandas Series:

sentences = reviews['review'].to_numpy()

labels = reviews['sentiment'].to_numpy()

Before any pre-processing, we need to split our dataset into training and test instances so that we can evaluate the accuracy of the trained model using the test dataset. We will use a 75:25 split for training and testing data, respectively:

X_train, X_test, y_train, y_test = train_test_split(sentences, labels, test_size=0.25)

print("Training Data Input Shape: ", X_train.shape)

print("Training Data Output Shape: ", y_train.shape)

print("Testing Data Input Shape: ", X_test.shape)

print("Testing Data Output Shape: ", y_test.shape)

Output:

Training Data Input Shape: (37500,)

Training Data Output Shape: (37500,)

Testing Data Input Shape: (12500,)

Testing Data Output Shape: (12500,)

So, our LSTM model will be trained using 37500 reviews, and later, its accuracy will be tested using the unseen 12500 reviews.
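The split above is random, so the exact reviews in each part change from run to run unless a seed is fixed (train_test_split accepts a random_state argument for this). As a rough illustration only, the following pure-Python sketch shows the idea behind a shuffled 75:25 split; the helper name split_indices and the seed value 42 are assumptions made for this example, not part of the original notebook:

```python
import random

def split_indices(n_samples, test_size=0.25, seed=42):
    """Shuffle sample indices and split them into train and test parts."""
    indices = list(range(n_samples))
    # A seeded generator makes the shuffle, and hence the split, reproducible.
    random.Random(seed).shuffle(indices)
    n_test = int(n_samples * test_size)
    return indices[n_test:], indices[:n_test]

train_idx, test_idx = split_indices(50000)
print(len(train_idx), len(test_idx))  # 37500 12500
```

Calling split_indices(50000) again with the same seed returns the same partition, which is what makes later results comparable between runs.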

To build a mathematical model, we need to convert the textual data (reviews) into numeric values. For most NLP tasks, the tokenizer is fitted on the entire text corpus, which in our scenario consists of all the training reviews.

During tokenization, sentences are split into individual words, and statistics are then computed for each word, such as:

word_counts: Represents the dictionary of words along with the word count in the entire text corpus

word_docs: Represents the dictionary of words depicting the number of documents in the text corpus containing a specific word

word_index: A dictionary mapping each word to a unique integer index, with more frequent words receiving lower indices

document_count: Represents the number of documents used for fitting the tokenizer
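To make these four statistics concrete, here is a simplified pure-Python sketch that computes them for a two-review toy corpus. It is not the Keras implementation: it only lowercases and splits on whitespace, it does not reserve an index for an out-of-vocabulary token, and the toy corpus is invented for illustration:

```python
from collections import Counter

# Toy corpus (hypothetical reviews, for illustration only)
corpus = [
    "a great movie with a great cast",
    "a boring movie",
]

word_counts = Counter()  # total occurrences of each word in the corpus
word_docs = Counter()    # number of documents that contain each word
for document in corpus:
    words = document.lower().split()
    word_counts.update(words)
    word_docs.update(set(words))  # count each word once per document

# More frequent words get lower indices; index 0 is left unused for padding.
ranked = sorted(word_counts, key=lambda w: -word_counts[w])
word_index = {word: rank for rank, word in enumerate(ranked, start=1)}
document_count = len(corpus)

print(word_counts["a"], word_docs["a"], word_index["a"], document_count)  # 3 2 1 2
```

Note the difference between the first two values: ‘a’ occurs three times in total but appears in only two documents.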

To tokenize the training data, we need to specify the vocabulary size, i.e., the maximum number of most frequent words to keep:

vocab_size = 10000

oov_tok = "<OOV>"

tokenizer = Tokenizer(num_words=vocab_size, oov_token=oov_tok)

Here, we keep only about the 10,000 most frequent words in the training data (Keras retains the words whose index is below num_words). We have also set oov_tok to “<OOV>”, a placeholder token that replaces any out-of-vocabulary word when texts are converted to sequences.
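The effect of the vocabulary limit and the OOV token can be sketched in plain Python. This is a simplified stand-in written for illustration, not the Keras code itself; the function texts_to_sequences below and the small word_index dictionary are assumptions chosen to mirror the behavior described above:

```python
def texts_to_sequences(texts, word_index, num_words, oov_index=1):
    """Map each word to its index; unknown or too-rare words become oov_index."""
    sequences = []
    for text in texts:
        sequence = []
        for word in text.lower().split():
            index = word_index.get(word)
            # Words missing from the vocabulary, or ranked beyond the limit,
            # are replaced by the OOV index.
            if index is None or index >= num_words:
                sequence.append(oov_index)
            else:
                sequence.append(index)
        sequences.append(sequence)
    return sequences

# Hypothetical vocabulary: lower index means more frequent word
word_index = {"<OOV>": 1, "the": 2, "movie": 3, "was": 4, "great": 5, "boring": 6}
texts = ["The movie was great", "The plot was boring"]
print(texts_to_sequences(texts, word_index, num_words=5))
# [[2, 3, 4, 1], [2, 1, 4, 1]]
```

With num_words=5, ‘great’ and ‘boring’ fall outside the limit and ‘plot’ was never seen, so all three map to index 1.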

Once we have defined the hyperparameters for Tokenizer(), we need to fit the tokenizer on the training data using fit_on_texts():

tokenizer.fit_on_texts(X_train)

print("Number of Documents: ", tokenizer.document_count)

print("Number of Words: ", tokenizer.num_words)

Output:

Number of Documents: 37500

Number of Words: 10000

We can also inspect the count of each word in the overall dictionary as well as the number of documents containing a specific word:

tokenizer.word_counts

Output:

OrderedDict([('a', 242147),

('true', 3404),

('hero', 1457),

('of', 217518),

('modern', 1346),

('times', 4672),

('chuck', 233),

('norris', 135),

('has', 24757),

('left', 3127),

('tv', 4246),

('walker', 220),

('rexas', 1),

('ranger', 143),

('and', 242868),

('is', 158393),

('looking', 3828),

('new', 6079),

('steps', 229),

('for', 65652),

('his', 43011),

('artistic', 493),

('career', 1479),

('the', 501267),

('president´s', 2),

...

('sometimes', 1688),

('cry', 596),

('nonetheless', 260),

('seemingly', 544),

...])

tokenizer.word_docs

Output:

defaultdict(int,

{'next': 2326,

'in': 32968,

'plenty': 810,

'judson': 4,

'tv': 3208,

'before': 5355,

'left': 2733,

'this': 33973,

'be': 21174,

'teachs': 1,

'action': 3418,

'career': 1285,

'like': 17395,

'walker': 145,

'world': 4020,

'old': 5020,

'br': 21885,

'usa': 232,

'rexas': 1,

'surprise': 956,

'heroism': 41,

'actors': 5368,

'a': 36226,

'young': 3926,

...

'nonetheless': 254,

'began': 442,

'seemingly': 514,

'erupted': 8,

...})

Once we have tokenized the entire text corpus, we need to convert each textual review into a numerical sequence using the fitted tokenizer:

train_sequences = tokenizer.texts_to_sequences(X_train)

print(train_sequences[0])

Output:

[4, 285, 633, 5, 686, 217, 2995, 4565, 46, 313, 239, 3126, 1, 4369, 3, 7, 257, 168, 3030, 17, 25, 1637, 623, 2, 1, 129, 7, 2, 329, 16, 5, 4, 743, 239, 199, 18, 4565, 9, 2, 417, 212, 3, 18, 192, 154, 38, 1993, 1, 39, 1, 8534, 28, 5, 2, 192, 5470, 5, 3126, 2, 157, 1226, 1, 6, 192, 1, 8889, 28, 5, 2, 89, 965, 1407, 5, 2, 1, 129, 9, 12, 1, 4, 345, 9, 2, 5063, 46, 3659, 183, 858, 17, 25, 1, 5, 7818, 9, 2771, 1915, 160, 2, 1644, 5399, 1648, 2195, 4565, 500, 5, 2995, 7, 2, 165, 2, 16, 7, 1171, 4, 50, 204, 158, 998, 5, 1880, 3, 1, 2995, 4565, 26, 108, 2388, 17, 2, 743, 1, 5, 3041, 25, 367, 435, 79, 27, 2, 944, 8, 8]

Each review in the training data is now converted into a numerical sequence which can be fed into a mathematical model for further training purposes.

However, reviews differ in length, so the resulting numeric sequences have different lengths as well.

So, we need to fix the sequence length to a constant value for every review. We will use a sequence length of 200.

The numerical sequences having lengths greater than 200 will be truncated at the end, whereas the ones having lengths smaller than 200 will be padded with zeros at the end. We can now specify the sequence padding for numerical sequences of textual reviews:

sequence_length = 200

train_padded = pad_sequences(train_sequences, maxlen=sequence_length, padding='post', truncating='post')
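To see what padding='post' and truncating='post' do to a single sequence, here is a minimal pure-Python sketch. The helper pad_post is a hypothetical name used only for this illustration, and it works on one list rather than on a batch as pad_sequences does:

```python
def pad_post(sequence, maxlen, value=0):
    """Cut a sequence at the end, or append padding values, to reach maxlen."""
    truncated = sequence[:maxlen]  # drop anything beyond maxlen (truncating='post')
    return truncated + [value] * (maxlen - len(truncated))  # pad at the end

print(pad_post([4, 285, 633], maxlen=5))               # [4, 285, 633, 0, 0]
print(pad_post([4, 285, 633, 5, 686, 217], maxlen=5))  # [4, 285, 633, 5, 686]
```

Every output has exactly maxlen entries, which is what allows the reviews to be stacked into one rectangular array.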

Once we have done pre-processing on the train data, we need to repeat the same steps for the test data:

test_sequences = tokenizer.texts_to_sequences(X_test)

test_padded = pad_sequences(test_sequences, maxlen=sequence_length, padding='post', truncating='post')

With tokenization, sequence conversion, and padding, we are now done with the pre-processing of the textual reviews.

Let’s now advance to building the actual LSTM model for sentiment analysis…

Sentiment Analysis using LSTM

The LSTM model consists of multiple layers, each taking input from the previous layer and passing its output to the next. The first layer takes the numerical sequences as input, and the last layer gives the predicted label as output.

We can define a Sequential() model to hold the layers of the network. Later, we can add as many layers as we want to this sequential model:

model = Sequential()

Firstly, we will add an embedding layer which will convert each word into a dense vector of embedding dimensions specified in the hyperparameters of the layer:

embedding_dim = 16

model.add(Embedding(vocab_size, embedding_dim, input_length=sequence_length))

Here we have specified the vocabulary size as well as the sequence length of each review. Next, we add a Bidirectional() wrapper around an LSTM layer with a specified number of units:

lstm_out = 32

model.add(Bidirectional(LSTM(lstm_out)))

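One advantage of a bidirectional LSTM is that it processes each sequence in both directions, from the first word to the last and from the last word to the first, so the representation of a review captures context from both sides. This can result in more robust models for sequence data such as text.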
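The following toy sketch illustrates the bidirectional idea and why 32 units per direction yield a 64-value output. It deliberately uses a trivial recurrence instead of a real LSTM cell, and the fixed weights (0.5 and 0.1) are arbitrary assumptions; only the forward/backward/concatenate structure is the point:

```python
import math

def simple_rnn_final_state(inputs, units):
    """Toy recurrence (not an LSTM): return the hidden state after the last input."""
    state = [0.0] * units
    for x in inputs:
        # Each unit mixes its previous state with the current input.
        state = [math.tanh(0.5 * s + 0.1 * (i + 1) * x) for i, s in enumerate(state)]
    return state

def bidirectional_final_state(inputs, units):
    """Run the recurrence in both directions and concatenate the results."""
    forward = simple_rnn_final_state(inputs, units)
    backward = simple_rnn_final_state(list(reversed(inputs)), units)
    return forward + backward

sequence = [0.2, -0.4, 0.9, 0.1]
output = bidirectional_final_state(sequence, units=32)
print(len(output))  # 64
```

The two halves of the output differ because each direction sees the inputs in a different order, which is the extra information a bidirectional layer provides.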

Next, we will specify a fully connected layer having 10 units and ‘relu’ activation:

model.add(Dense(10, activation='relu'))

Finally, we will add an output layer having only 1 unit and ‘sigmoid’ activation. This layer uses the sigmoid function to output the probability that an input belongs to class 1 (positive):

model.add(Dense(1, activation='sigmoid'))
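As a small illustration of what the output unit computes, the sketch below applies the sigmoid function to a raw score and converts the resulting probability to a label. The 0.5 threshold and the helper name to_label are assumptions made here; the original notebook does not specify a threshold:

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def to_label(probability, threshold=0.5):
    """Turn a predicted probability into a sentiment label (threshold is an assumption)."""
    return "positive" if probability >= threshold else "negative"

for score in (-2.0, 0.0, 2.0):
    p = sigmoid(score)
    print(round(p, 4), to_label(p))
# 0.1192 negative
# 0.5 positive
# 0.8808 positive
```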

Now we need to compile the model so that it minimizes the `binary_crossentropy` loss during training. The loss is the quantity that the ‘adam’ optimizer minimizes by adjusting the weights during the training phase.

Essentially, the ‘adam’ optimizer is a gradient-based method that iteratively updates the trainable parameters to reduce the loss; like other such optimizers, it is not guaranteed to reach the global minimum. Moreover, the ‘accuracy’ of the model will be reported for each training batch/epoch to gauge the convergence of the neural network.

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
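For intuition about the loss being minimized, here is a minimal pure-Python sketch of binary cross-entropy averaged over a batch. It follows the standard formula; the clipping constant eps is an assumption added to avoid log(0) and may differ from what Keras uses internally:

```python
import math

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -(y*log(p) + (1-y)*log(1-p)) over all samples."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep p away from 0 and 1
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)

print(round(binary_crossentropy([1, 0], [0.9, 0.1]), 4))  # 0.1054
print(round(binary_crossentropy([1, 0], [0.1, 0.9]), 4))  # 2.3026
```

Confident correct predictions give a small loss, while confident wrong predictions are penalized heavily, which is the signal the optimizer follows.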

The summary of the LSTM model can be printed as follows:

print(model.summary())

Output:

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding (Embedding) (None, 200, 16) 160000

bidirectional (Bidirectional) (None, 64) 12544

dense (Dense) (None, 10) 650

dense_1 (Dense) (None, 1) 11

=================================================================

Total params: 173,205

Trainable params: 173,205

Non-trainable params: 0

_________________________________________________________________

None
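The parameter counts in the summary can be reproduced by hand. The arithmetic below is a sketch using the standard formulas for embedding, LSTM, and dense layers; the variable names are chosen for this example:

```python
vocab_size, embedding_dim, lstm_units = 10000, 16, 32

# Embedding: one vector of size embedding_dim per vocabulary entry
embedding_params = vocab_size * embedding_dim

# LSTM: 4 gates, each with input weights, recurrent weights, and a bias
lstm_params = 4 * (lstm_units * (embedding_dim + lstm_units) + lstm_units)
bidirectional_params = 2 * lstm_params  # one LSTM per direction

# Dense layers: weights plus one bias per unit
dense_params = (2 * lstm_units) * 10 + 10
output_params = 10 * 1 + 1

total_params = embedding_params + bidirectional_params + dense_params + output_params
print(embedding_params, bidirectional_params, dense_params, output_params, total_params)
# 160000 12544 650 11 173205
```

Most of the parameters sit in the embedding layer, so the vocabulary size and embedding dimension dominate the size of this model.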

Before training, we need to specify the number of epochs for which the network will be trained. We cannot know the ideal number in advance, so we usually take an educated guess.

Suppose we set the number of epochs to 10, but the model reaches its minimum validation loss after 2 epochs. The remaining 8 epochs would only tend to overfit the model to the training data. So, how do we avoid this situation?

It’s simple: specify an EarlyStopping() callback, which halts training once the validation loss has failed to improve for the number of epochs given by its patience parameter:

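checkpoint_filepath = os.path.join(os.getcwd(), 'best_model.keras')  # hypothetical file name; recent Keras versions require a .keras or .h5 suffix for full-model checkpoints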

model_checkpoint_callback = ModelCheckpoint(filepath=checkpoint_filepath, save_weights_only=False, monitor='val_loss', mode='min', save_best_only=True)

callbacks = [EarlyStopping(patience=2), model_checkpoint_callback]

Along with the EarlyStopping() callback, you can specify ModelCheckpoint() to monitor the validation loss after each epoch and save the best model seen so far.

Now, we will fit our model on the training data for a maximum of 10 epochs. Since the callbacks monitor the validation loss, we need to supply validation data as well; here the test split is used for that purpose, so the validation metrics are not a fully independent estimate of generalization. If the validation loss does not improve for two consecutive epochs, training halts as specified in the callback:

history = model.fit(train_padded, y_train, epochs=10, validation_data=(test_padded, y_test), callbacks=callbacks)

Output:

Epoch 1/10

1172/1172 [==============================] - 348s 297ms/step - loss: 0.2366 - accuracy: 0.9090 - val_loss: 0.3293 - val_accuracy: 0.8581

Epoch 2/10

1172/1172 [==============================] - 220s 188ms/step - loss: 0.2044 - accuracy: 0.9228 - val_loss: 0.3471 - val_accuracy: 0.8611

Epoch 3/10

1172/1172 [==============================] - 205s 175ms/step - loss: 0.1779 - accuracy: 0.9325 - val_loss: 0.3807 - val_accuracy: 0.8478

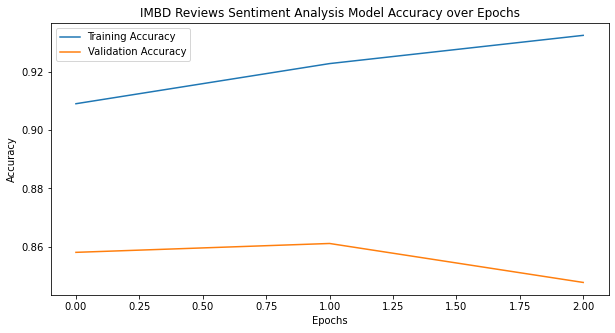

Training was halted after 3 epochs because the validation loss did not improve in the two epochs that followed the first one, which matches the patience of 2 set in the callback.
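The stopping behavior can be replayed with a few lines of plain Python. This is a simplified sketch of the patience logic, not the Keras callback itself; the function name early_stopping_epoch is hypothetical, and the validation losses are the three values from the training log above:

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (epoch at which training stops, epoch with the best validation loss)."""
    best_loss = float("inf")
    best_epoch = None
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch

val_losses = [0.3293, 0.3471, 0.3807]  # val_loss values from the log above
print(early_stopping_epoch(val_losses))  # (3, 1)
```

The best validation loss occurs at epoch 1, which is therefore the model that a checkpoint with save_best_only=True would keep.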

Since model.fit() returned a ‘history’ object that records the loss and accuracy for each epoch, we can tabulate the training/validation metrics:

metrics_df = pd.DataFrame(history.history)

print(metrics_df)

Output:

loss accuracy val_loss val_accuracy

0 0.236580 0.90904 0.329251 0.85808

1 0.204433 0.92280 0.347143 0.86112

2 0.177877 0.93248 0.380667 0.84776

We can also visualize the loss and accuracy for training/testing data over the number of epochs using matplotlib:

plt.figure(figsize=(10,5))

plt.plot(metrics_df.index, metrics_df.loss)

plt.plot(metrics_df.index, metrics_df.val_loss)

plt.title('IMDB Reviews Sentiment Analysis Model Loss over Epochs')

plt.xlabel('Epochs')

plt.ylabel('Binary Crossentropy')

plt.legend(['Training Loss', 'Validation Loss'])

plt.show()

plt.figure(figsize=(10,5))

plt.plot(metrics_df.index, metrics_df.accuracy)

plt.plot(metrics_df.index, metrics_df.val_accuracy)

plt.title('IMDB Reviews Sentiment Analysis Model Accuracy over Epochs')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend(['Training Accuracy', 'Validation Accuracy'])

plt.show()

So, we achieved about 86% validation accuracy on the IMDB review dataset by training a simple bidirectional LSTM network. This accuracy could be improved further by stacking LSTM layers or by using a larger vocabulary, which will be implemented in future articles.

Conclusion

In this tutorial, we trained a bidirectional LSTM model for binary sentiment classification of the IMDB review dataset using the TensorFlow and Keras APIs. A custom neural network architecture was built around the LSTM layer and then trained on the training reviews. A callback was used to halt training if the validation loss did not improve for two consecutive epochs.

The model was able to categorize the sentiments in the test reviews as either ‘positive’ or ‘negative’ with an accuracy of approximately 86%. This accuracy can be improved further by using a different neural network architecture, different data pre-processing, and/or hyperparameter tuning.
